Previously, I discussed the use of Bayesian modelling for appliance signature matching. Following Bayes' rule, the posterior is proportional to the product of the prior and the evidence (strictly speaking, the likelihood of t given X):

$$P(X \mid t) \propto P(X)\, P(t \mid X)$$

where:

- X = the event that a candidate appliance cycle corresponds to an actual appliance cycle

- t = time between the previous known appliance cycle and the candidate appliance cycle

The prior, P(X), will be calculated from a combination of smoothness metrics. The evidence, P(t|X), will be calculated from the known probability distribution of times between appliance cycles. Each is explained below.

Prior: P(X)

So far, I have proposed three metrics for assigning a confidence value to a possible appliance cycle. When defining them, I'll use the following notation:

- P = Aggregate power

- A = Appliance power

- Q = P - A (aggregate power with the appliance removed)

- t = time instant, from 1 to T (within these metrics only; elsewhere t is the time between cycles)

1DERIV:

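Difference between the sum of the absolute first derivative of the aggregate power, P, and that of the aggregate-minus-appliance power, Q:

$$\mathrm{1DERIV} = \sum_{t=2}^{T} \left| P_t - P_{t-1} \right| - \sum_{t=2}^{T} \left| Q_t - Q_{t-1} \right|$$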
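A minimal Python sketch of 1DERIV, assuming evenly sampled power readings, derivatives approximated by first differences, and absolute values summed (without them the sum would telescope to the end-point difference). The function name `one_deriv` and the sample readings are hypothetical.

```python
def one_deriv(aggregate, appliance):
    """Hypothetical 1DERIV: reduction in summed |first difference| after
    subtracting a candidate appliance signature from the aggregate."""
    if len(aggregate) != len(appliance):
        raise ValueError("aggregate and appliance must have equal length")
    # Q = P - A: aggregate power with the candidate cycle removed
    residual = [p - a for p, a in zip(aggregate, appliance)]

    def total_variation(x):
        # Assumed form: sum of |x[t] - x[t-1]| over consecutive samples
        return sum(abs(x[t] - x[t - 1]) for t in range(1, len(x)))

    # Positive result: the subtraction made the signal smoother
    return total_variation(aggregate) - total_variation(residual)


aggregate = [100, 100, 250, 250, 100, 100]  # made-up readings (W)
good_fit = [0, 0, 150, 150, 0, 0]           # signature aligned with the step
bad_fit = [0, 150, 150, 0, 0, 0]            # signature shifted by one sample
print(one_deriv(aggregate, good_fit))  # 300
print(one_deriv(aggregate, bad_fit))   # -300
```

A well-aligned signature removes the step entirely, while a misaligned one adds new edges, so the sign of the result separates the two cases.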

2DERIV:

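Difference between the sum of the absolute second derivative of the aggregate power, P, and that of the aggregate-minus-appliance power, Q:

$$\mathrm{2DERIV} = \sum_{t=2}^{T-1} \left| P_{t+1} - 2P_t + P_{t-1} \right| - \sum_{t=2}^{T-1} \left| Q_{t+1} - 2Q_t + Q_{t-1} \right|$$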
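The same idea with second differences can be sketched as follows, again assuming evenly spaced samples and summed absolute values; `two_deriv` is a hypothetical name and the readings are made up.

```python
def two_deriv(aggregate, appliance):
    """Hypothetical 2DERIV: reduction in summed |second difference| after
    subtracting a candidate appliance signature from the aggregate."""
    if len(aggregate) != len(appliance):
        raise ValueError("aggregate and appliance must have equal length")
    residual = [p - a for p, a in zip(aggregate, appliance)]  # Q = P - A

    def curvature(x):
        # Assumed form: sum of |x[t+1] - 2*x[t] + x[t-1]| over interior samples
        return sum(abs(x[t + 1] - 2 * x[t] + x[t - 1])
                   for t in range(1, len(x) - 1))

    return curvature(aggregate) - curvature(residual)


aggregate = [100, 100, 250, 250, 100, 100]
print(two_deriv(aggregate, [0, 0, 150, 150, 0, 0]))  # 600
print(two_deriv(aggregate, [0, 150, 150, 0, 0, 0]))  # -150
```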

ENERGYRATIO:

Ratio of the energy consumed by the appliance during its cycle to the energy consumed by all other appliances during the cycle:

$$\mathrm{ENERGYRATIO} = \frac{\sum_{t=a}^{b} A_t}{\sum_{t=a}^{b} Q_t}$$

where:

- a = time instant of cycle start

- b = time instant of cycle end
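A short sketch of ENERGYRATIO, assuming a constant sampling interval so that energy is proportional to the sum of power readings (the interval cancels in the ratio). The function name, the 0-based inclusive indices `a` and `b`, and the example data are illustrative assumptions.

```python
def energy_ratio(aggregate, appliance, a, b):
    """Hypothetical ENERGYRATIO over samples a..b inclusive (0-based).

    Assumes a constant sampling interval, so energy is proportional to
    the sum of power readings and the interval cancels in the ratio.
    """
    appliance_energy = sum(appliance[a:b + 1])
    # Energy of everything else: Q = P - A summed over the cycle
    other_energy = sum(p - s for p, s in zip(aggregate[a:b + 1], appliance[a:b + 1]))
    if other_energy <= 0:
        raise ValueError("residual energy must be positive; candidate exceeds aggregate")
    return appliance_energy / other_energy


aggregate = [100, 100, 250, 250, 100, 100]
fridge = [0, 0, 150, 150, 0, 0]
print(energy_ratio(aggregate, fridge, 2, 3))  # 1.5
```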

Evidence: P(t|X)

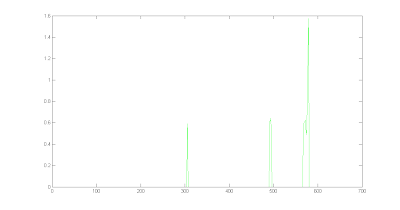

We know the probability distribution of the time between appliance cycles. This is shown by the histogram below:

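This can be modelled as a normal distribution whose mean, μ, and standard deviation, σ, are estimated from this data set. The evidence is then the value of this density at the candidate's inter-cycle time:

$$P(t \mid X) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(t-\mu)^2}{2\sigma^2}\right)$$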
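Fitting the normal model and evaluating it can be sketched with the standard library's `statistics.NormalDist` (Python 3.8+), whose `from_samples` uses the sample mean and sample standard deviation. The gap values below are invented stand-ins for the histogram data, and `evidence` is a hypothetical helper name.

```python
from statistics import NormalDist

# Made-up inter-cycle times in minutes, standing in for the histogram data
gaps = [38, 42, 40, 41, 39]

# Fit a normal distribution from the sample mean and standard deviation
gap_model = NormalDist.from_samples(gaps)


def evidence(t):
    """P(t|X) approximated by the fitted normal density at gap t."""
    return gap_model.pdf(t)


print(gap_model.mean)               # 40.0
print(evidence(40) > evidence(45))  # True
```

Strictly this returns a density rather than a probability, but only relative values across candidates matter when picking the best one.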

Posterior: P(X|t)

I have calculated the posterior using two combinations of smoothness metrics to form the prior:

- threshold(1DERIV) * 2DERIV

- threshold(1DERIV) * ENERGYRATIO

In both cases, the 1DERIV metric was used to determine whether subtracting the appliance signature smoothed the aggregate power array. A threshold was applied to these smoothness values to produce a list of candidates for which the subtraction increased the smoothness of the array. Each candidate's thresholded value was then multiplied by a confidence metric: 2DERIV in the first case and ENERGYRATIO in the second.
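The thresholded prior can be sketched as below. The cut-off of 0.0 (the subtraction must reduce roughness) and the clipping of negative confidences to zero are assumptions made so the result is a valid non-negative weight; `prior_score` is a hypothetical name.

```python
def prior_score(one_deriv_value, confidence, threshold=0.0):
    """Unnormalised prior: threshold(1DERIV) * confidence.

    `confidence` is the 2DERIV or ENERGYRATIO value for the candidate.
    threshold=0.0 is an assumed cut-off. Negative confidences are clipped
    to zero so the result can serve as a non-negative weight.
    """
    passes = 1.0 if one_deriv_value > threshold else 0.0
    return passes * max(confidence, 0.0)


print(prior_score(300, 600))   # 600.0  threshold(1DERIV) * 2DERIV
print(prior_score(300, 1.5))   # 1.5    threshold(1DERIV) * ENERGYRATIO
print(prior_score(-300, 1.5))  # 0.0    subtraction roughened the signal
```

The product-of-metrics variant discussed at the end of this post corresponds to passing the product of the 2DERIV and ENERGYRATIO values as `confidence`.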

Given a known appliance cycle and a number of candidates for the immediately following cycle, the posterior probability of each candidate cycle was calculated. The candidate with the highest posterior probability (i.e. the maximum a posteriori candidate) was selected as the correct cycle, and the process was repeated sequentially for the remaining cycles.
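The sequential selection can be sketched as a greedy loop. Assumptions: each candidate is given as a `(start_time, prior)` pair with its prior already computed, and the gap t is measured from one cycle start to the next (swap in end-to-start times if modelling off durations). Since normalising the posterior does not change which candidate scores highest, the unnormalised product P(X)·P(t|X) is enough. `track_cycles` and the example numbers are hypothetical.

```python
from statistics import NormalDist


def track_cycles(first_start, candidates, gap_model, n_cycles):
    """Greedy sequential selection of appliance cycles (a sketch).

    candidates: list of (start_time, prior) pairs.
    gap_model: NormalDist for the time between consecutive cycle starts.
    """
    cycles = [first_start]
    for _ in range(n_cycles):
        prev = cycles[-1]
        best, best_score = None, 0.0
        for start, prior in candidates:
            if start <= prev:
                continue  # only consider cycles after the last accepted one
            # Unnormalised posterior: P(X) * P(t|X)
            score = prior * gap_model.pdf(start - prev)
            if score > best_score:
                best, best_score = start, score
        if best is None:
            break  # no candidate with a positive posterior remains
        cycles.append(best)
    return cycles


model = NormalDist(mu=40, sigma=2)  # made-up gap model (minutes)
cands = [(25, 1.0), (41, 0.5), (43, 0.9), (80, 0.8), (84, 0.2)]
print(track_cycles(0, cands, model, n_cycles=2))  # [0, 41, 80]
```

Note that the candidate at 43 has a higher prior than the one at 41, but the evidence term favours the gap closer to the mean.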

The two plots below show the estimated fridge cycles (blue) against actual fridge cycles (red).

1. threshold(1DERIV) * 2DERIV

2. threshold(1DERIV) * ENERGYRATIO

Both approaches performed well, correctly detecting 23 and 24 of the 31 actual cycles respectively. Each generated only 30 positives, of which 7 and 6 respectively were false positives. There was a common interval in which neither approach generated a true or false positive, occurring when the off duration was at its minimum. A model in which the expected off duration varies over time might improve the performance of both approaches in this interval.
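The reported counts can be summarised as precision, recall and F1. This is a small arithmetic sketch using only the numbers stated above; `detection_scores` is a hypothetical helper.

```python
def detection_scores(true_pos, false_pos, actual):
    """Precision, recall and F1 from event-detection counts."""
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / actual
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Counts reported above: 30 positives each, 31 actual cycles
for name, tp, fp in [("threshold(1DERIV) * 2DERIV", 23, 7),
                     ("threshold(1DERIV) * ENERGYRATIO", 24, 6)]:
    p, r, f1 = detection_scores(tp, fp, actual=31)
    print(f"{name}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
# threshold(1DERIV) * 2DERIV: precision=0.767 recall=0.742 F1=0.754
# threshold(1DERIV) * ENERGYRATIO: precision=0.800 recall=0.774 F1=0.787
```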

It is also interesting to note that approach 1 outperforms approach 2 in some areas, while approach 2 outperforms approach 1 in others. This is encouraging, as it suggests the two approaches may be complementary. I also investigated a third approach in which the confidence metric was the product of 2DERIV and ENERGYRATIO, but the result was less accurate than either individual approach.